Ethical Issues in Artificial Intelligence Algorithms


Introduction

Over the past few years, artificial intelligence (AI) has become a major part of our daily lives and our society. It has affected almost every major industry, including healthcare, motor vehicles, and business, and it has helped companies hire and manage employees and carry out many other tasks. This growth raises ethical issues, including errors, bias, and how much is known about how these algorithms work. Like almost any computer program, AI is prone to errors, so we must ask how to handle these errors, how to mitigate them, and who is responsible for them. These issues are described, debated, and discussed below.

First, what is an algorithm? In its simplest form, an algorithm is a set of instructions that takes some input and produces an output (Martin, K.). A very simple algorithm might take in a number and output that number plus one: given 2, it returns 3. Another algorithm might take in a number and return that number plus a random number between one and ten. Given an input of 2, it might return 4, 5, 6, and so on. In other words, an algorithm does not always return the same output for a given input.
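The two example algorithms above can be written as short Python functions. This is only an illustrative sketch: the function names `add_one` and `add_random` are hypothetical, and the random version assumes Python's standard `random` module as its source of randomness.

```python
import random


def add_one(n: int) -> int:
    """Deterministic algorithm: the same input always produces the same output."""
    return n + 1


def add_random(n: int, rng: random.Random) -> int:
    """Non-deterministic algorithm: adds a random integer from 1 to 10 (inclusive)."""
    return n + rng.randint(1, 10)


print(add_one(2))  # 3

rng = random.Random()
# Calling add_random(2, rng) repeatedly gives values between 3 and 12 that vary run to run.
print([add_random(2, rng) for _ in range(5)])
```

Passing the random generator in as a parameter is a design choice: seeding it (for example with `random.Random(0)`) makes the "random" algorithm reproducible, which is useful when testing it.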

Next, what is artificial intelligence (AI)? AI is typically broken down into two categories: strong AI and weak AI (Siau, Keng & Wang, Weiyu). Strong AI, or artificial general intelligence, is AI that can do general tasks the way a human would. Such a system would be able to think and to apply knowledge learned in one area to another, much like a human (Bostrom, Nick and Eliezer Yudkowsky). This has not yet been achieved. The other type is weak AI, in which an algorithm learns to do a specific task such as classifying images, predicting the weather, or playing chess (Bostrom, Nick and Eliezer Yudkowsky). Although the notion of artificial general intelligence raises many ethical debates, it is not the focus of this report. Instead, the focus is on weak AI and the ethical issues that arise from it.

AI is used in many fields. It is the driving force behind self-driving cars, decisions in the justice system, and the hiring process. For self-driving cars, data is prepared and then used to train a computer to drive; deep learning and neural networks are often used for this task (Qing Rao and Jelena Frtunikj). One example of this in action is Uber, which is currently using and testing self-driving cars in various states, including California and Arizona. In the justice system, COMPAS is an AI algorithm used to help decide whether an inmate will be granted parole. It takes in data such as the inmate's behavior, criminal history, parental criminal history, and many other data points, and then outputs a risk score (Martin, K.). AI is also widely used in the hiring process, both to narrow down the pool of applicants and to decide which people are shown job ads (Bogen, Miranda). All of these tasks were once done by humans but are increasingly being handed over to algorithms. As this replacement occurs, ethical issues can arise. Some may choose to ignore or dismiss these issues, but that does not make them go away (Siau, Keng & Wang, Weiyu).

This report outlines the ethical issues involved in AI. It covers bias: where it happens, how it happens, and its consequences and challenges. It also discusses how well AI algorithms are understood and the transparency involved (or the lack thereof). Finally, it discusses accountability and responsibility: who should be held accountable when errors occur, and the gaps that exist in the law.

Description of the issue

Bias occurs when an algorithm treats a person or group of people unfairly. One major contributor to bias in algorithms is data. The data is collected from human behavior, and humans can be biased. The AI algorithm then learns this bias, which leads to unwanted results. These can include bias related to a particular race or gender (Siau, Keng & Wang, Weiyu), such as a hiring algorithm that favors men (Dastin, Jeffrey) or a judicial algorithm that favors white people (Martin, K.).

One way algorithms become biased is by learning from historical data: any bias present in that data is learned by the algorithm. Another is that a company or institution could modify the data to favor certain behaviors from the algorithm. This is called institutional bias (Bogen, Miranda). A recent study found that most hiring algorithms drift toward “bias by default,” even when measures are taken to remove biased data points from the data (Bogen, Miranda).

When developers or firms fail to understand their AI algorithm, this can also have a negative impact. AI algorithms and the underlying machine learning algorithms can be extremely complicated, so it can be very hard to understand why an algorithm did what it did (Bostrom, Nick and Eliezer Yudkowsky). For example, if one were using a complex neural network or a genetic algorithm and wanted to know why it made a particular prediction, it would be very difficult to explain the result just by looking at its learned parameters, such as a network's weight and bias matrices. This matters because such algorithms are used to classify inmates (as in the COMPAS example) and to analyze resumes and recommend whom to hire. If there is an unwanted result, the inmate or the job applicant has a right to know why, but this explanation is not given to them when an AI algorithm is used. Using a decision tree algorithm can help mitigate this issue, because a decision tree is easier to inspect (Bostrom, Nick and Eliezer Yudkowsky).

On the other hand, AI algorithms should be robust against human manipulation. A person should not be able to change the inputs slightly to get the outcome they want rather than the correct outcome. This is a balancing act: on one side, we want to be able to see what is happening; on the other, we do not want the algorithm to be easily influenced (Bostrom, Nick and Eliezer Yudkowsky).
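To illustrate why a decision tree is easier to inspect, the sketch below walks a small tree and records each test it applies, so every decision comes with the exact path that produced it. The tree, its features (`years_experience`, `skills_matched`), and its thresholds are hypothetical and hand-built for illustration; a real system would learn them from data, and this is not how any specific hiring tool or COMPAS works.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    """Internal nodes test one feature against a threshold; leaves hold a decision."""
    feature: str | None = None
    threshold: float = 0.0
    left: Node | None = None      # followed when value <= threshold
    right: Node | None = None     # followed when value > threshold
    decision: str | None = None   # set only on leaf nodes


def predict_with_reasons(node: Node, applicant: dict[str, float]) -> tuple[str, list[str]]:
    """Walk the tree, recording every test applied on the way to a leaf."""
    reasons: list[str] = []
    while node.decision is None:
        value = applicant[node.feature]
        if value <= node.threshold:
            reasons.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            reasons.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return node.decision, reasons


# Hypothetical hand-built tree for illustration only.
tree = Node(
    feature="years_experience", threshold=2,
    left=Node(decision="reject"),
    right=Node(
        feature="skills_matched", threshold=3,
        left=Node(decision="reject"),
        right=Node(decision="interview"),
    ),
)

decision, reasons = predict_with_reasons(tree, {"years_experience": 5, "skills_matched": 2})
print(decision)  # reject
for reason in reasons:
    print(" -", reason)
#  - years_experience = 5 > 2
#  - skills_matched = 2 <= 3
```

The rejected applicant can be told exactly which tests led to the outcome. A neural network offers no comparable path, since its output comes from many weighted sums rather than a short chain of readable rules.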

When an AI algorithm makes a mistake, who is responsible for it? Is it the organization? The developer? The user of the AI algorithm? Who is responsible for the inevitable errors of AI algorithms (Bostrom, Nick and Eliezer Yudkowsky)? If no one is held accountable, whom do we blame when a self-driving car hits a pedestrian? The cause could be a programming error, the training data, or a variety of other factors.

Major challenges and impact

Bias

Bias is a challenging issue to address in AI algorithms, and it can cause unwanted and unethical results. One example involved the COMPAS algorithm described earlier, which contributed to a person being wrongly denied parole even though they were a model inmate: they had a job lined up for their release, no infractions, and a record of good behavior (Martin, K.). COMPAS was biased against Black people. Looking at the errors the algorithm made, it falsely labeled Black people as high risk at almost twice the rate that it falsely labeled white people as high risk. Conversely, it falsely labeled white people as low risk at almost twice the rate of Black people (Martin, K.). When this bias exists in algorithms and is left unchecked, it leads to unethical outcomes such as people being wrongly denied parole. Bias can also lead to facial recognition that only works well for certain races and to search results that reinforce gender stereotypes.
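The comparison above concerns error rates within each group rather than raw counts. The sketch below shows how such rates can be computed from a list of predictions and outcomes. The records are invented, with generic group labels "A" and "B"; they are not COMPAS data, and the function name `error_rates_by_group` is hypothetical.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Compute per-group false positive and false negative rates.

    records: iterable of (group, predicted_high_risk, reoffended) tuples.
    FPR = labeled high risk but did not reoffend / all who did not reoffend
    FNR = labeled low risk but did reoffend / all who did reoffend
    """
    counts = defaultdict(lambda: {"fp": 0, "neg": 0, "fn": 0, "pos": 0})
    for group, predicted_high, reoffended in records:
        c = counts[group]
        if reoffended:
            c["pos"] += 1
            if not predicted_high:
                c["fn"] += 1
        else:
            c["neg"] += 1
            if predicted_high:
                c["fp"] += 1
    return {
        group: (
            c["fp"] / c["neg"] if c["neg"] else 0.0,
            c["fn"] / c["pos"] if c["pos"] else 0.0,
        )
        for group, c in counts.items()
    }


# Invented toy data: (group, predicted_high_risk, reoffended)
records = (
    [("A", True, False)] * 4 + [("A", False, False)] * 6
    + [("A", False, True)] * 2 + [("A", True, True)] * 8
    + [("B", True, False)] * 2 + [("B", False, False)] * 8
    + [("B", False, True)] * 4 + [("B", True, True)] * 6
)

for group, (fpr, fnr) in sorted(error_rates_by_group(records).items()):
    print(f"{group}: false positive rate {fpr:.2f}, false negative rate {fnr:.2f}")
# A: false positive rate 0.40, false negative rate 0.20
# B: false positive rate 0.20, false negative rate 0.40
```

In this toy data both groups are classified correctly 14 times out of 20, yet members of group A who did not reoffend are wrongly flagged as high risk twice as often as those in group B. Overall accuracy alone would hide the disparity, which is why errors are broken down by group.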

In principle, having an algorithm make these choices sounds like an excellent idea: ideally, an algorithm would take human bias out of the decision-making process (Martin, K.). Unfortunately, the source of this bias is humans themselves. Human behavior, captured as data, is fed into the algorithms, which then learn from it. The unfortunate truth is that, historically, humans have not always made unbiased moral decisions, and algorithms learn this unethical behavior too (Siau, Keng & Wang, Weiyu).

When developers design these algorithms, they have a specific use case in mind and build the algorithm to do one task. The developers define the scope of the problem and how it will be modeled, and they choose what the algorithm takes as its inputs. Some of these inputs can introduce bias: including factors that are outside a person's control can lead to unwanted results. For example, the inputs to the COMPAS algorithm include the person's parental criminal history (Martin, K.). Inputs like this can amplify bias and make it harder to detect.

Transparency

This ethical issue occurs when companies or firms do not provide information about why an algorithm produced certain results. When we trust algorithms to do things like hire people or drive our cars, we may want to know why they make certain decisions. On the other hand, disclosing this information can make the algorithm more vulnerable to malicious attacks (Burt, Andrew). It is up to firms and companies to decide how to manage this risk.

The EU recently published guidelines that set out requirements for trustworthy AI. There are seven requirements in total, and transparency is a key one. The EU began implementing this strategy in mid-2018, and by late 2019 the guidelines had been tested and used by over 350 organizations. Much of what the guidelines cover is already addressed by existing EU legislation, but they help fill the gaps when it comes to transparency, traceability, and human oversight (Larsson, Stefan and Fredrik Heintz).

Regarding transparency, these guidelines state that an AI system must have three key features: communication, traceability, and explainability. Communication means telling users that they are in fact interacting with an AI, offering them a human alternative if they want one, and communicating the AI's limitations to the end user. Traceability means documenting how the data was collected and labeled and which AI algorithm was used, so that the system can be inspected later and mistakes are not repeated. Explainability means that the algorithm's decision-making process can be explained in a human-readable way, including the decisions made by humans along the way, so that a user can get a fair explanation of why the algorithm did what it did (“Ethics Guidelines for Trustworthy AI”).

This issue is particularly important for critical choices such as whom to hire or whom to put in jail. Hiring algorithms are used to streamline a company's hiring process, but when an AI algorithm is used, its decision or rating often comes without an explanation of why it decided what it did (Gutierrez, Daniel). This can expose the company to legal issues where the law requires it to give an explanation to people who were not hired. It is also unethical to make a decision without any reasoning to back it up.

Another example is criminal risk assessment. These algorithms are often used to give a person a risk rating, but they provide no explanation of why they chose that rating. This is problematic because these algorithms are often biased (Gutierrez, Daniel).

When deciding whom to hire or whom to put in jail, it is very important to check for bias and, if bias is present, to be able to determine why it is happening. This is only possible if the algorithms are transparent.

Accountability

When an AI algorithm inevitably makes mistakes, people will want to know who is responsible. In the healthcare industry, if AI is developed in house, the healthcare institution is responsible for its effects: if the AI mislabels a cancer patient and the institution fails to catch the error, the institution is responsible (Stotler, Laura). On the other hand, if the institution purchases the product commercially, who is at fault when it fails? If the AI algorithm being used is not mandated by the state, we must turn to federal law, and in that case the healthcare facility would still be responsible for the AI algorithm's mistakes (Stotler, Laura). This example shows that one should always be vigilant, question the output of an AI algorithm, and make sure to follow the law when doing so.

One example of such a case going to court is Samathur Li Kin-kan's lawsuit against Tyndaris, seeking 23 million dollars in damages. Allegedly, a Tyndaris salesperson convinced Samathur Li Kin-kan to invest some of his money in a fund managed by an AI algorithm (Beardsworth, Thomas). The AI algorithm, called K1, managed a portfolio worth 2.5 billion dollars (Thompson, Kirsten), of which 250 million was Samathur Li Kin-kan's money and the rest came from Citigroup Inc. The goal was to grow this portfolio over time (Beardsworth, Thomas). Samathur Li Kin-kan is suing Tyndaris because he claims the salesperson grossly overstated the AI's capabilities. K1 did end up losing him a significant amount of money, including 20 million dollars on one particular day (Beardsworth, Thomas).

Ethical issues arise because it is not clear who is responsible for the AI's errors. In this case, the AI was a black box: it was not transparent about why or how it made its decisions. Meanwhile, Tyndaris is suing Samathur Li Kin-kan for unpaid fees (Beardsworth, Thomas).

Looking at the laws around this issue in Quebec, Article 1465 of the Civil Code of Québec states that “the custodian of a thing is bound to make reparation for injury resulting from the autonomous act of the thing, unless he proves that he is not at fault.” It is unclear whether this applies to AI. One would first have to establish who the custodian is. In this case, it might be Tyndaris, which applied K1 to managing funds, or 42.cx, the company that developed K1 (Thompson, Kirsten). Because the laws that could apply to AI are so general, they are particularly hard to enforce.

In common law jurisdictions, by contrast, there are no rules or laws regarding the ‘acts of a thing’. There, a plaintiff might have to prove that Tyndaris owed a “duty of care” in relation to the AI and then rely on the “tort of negligence” (Thompson, Kirsten).

Overall, the laws surrounding these issues are very general, which makes it easy for companies to behave unethically with little or no repercussions. This is the first case of its kind, and although it is still ongoing, its outcome may set a precedent for future cases.

Hiring algorithms

Among many other uses, AI algorithms are used in hiring, both to screen candidates and to determine which job ads are shown to which people. While this may sound harmless, bias can quickly become a problem (Bogen, Miranda). A 2019 study of Facebook's ad delivery system found substantial bias and skew in who was shown which ads: 75% of the audience for taxi driver ads was Black, and supermarket job ads were shown mainly to women (more than 80% of the audience) (Ali, Muhammad). Facebook's goal was to show users relevant ads, but the result was biased, reinforcing gender and racial stereotypes that already exist in our society. Furthermore, these algorithms are often more concerned with who will click on an ad than with who is a good candidate for the job. This is institutional bias and can add to existing biases in the targeted ad process (Bogen, Miranda).

AI algorithms are also used at the screening stage. Companies often use them to narrow down the number of candidates, having an AI algorithm read resumes and then give a rating or recommend that only certain candidates move forward. Many companies claim that this is not the only tool they use and that they do not reject candidates based on it alone (Bogen, Miranda). The problem again arises when these algorithms become biased: they might rank male candidates above similarly qualified female candidates, which is clearly unethical. In the US, the Uniform Guidelines on Employee Selection Procedures state that employers are “obligated” to test their selection tools for bias and ensure that they are not biased against a particular group (Bogen, Miranda). One approach is to remove certain characteristics (e.g., the candidate's gender or race) from the data, but if bias exists in the data, it can still show up in the output, because other features can stand in for the removed ones. Firms should meet what the government requires and go further: check their data sources for bias and rigorously test their AI algorithms after they are created.
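One widely used way to test a selection tool is the “four-fifths rule” in the Uniform Guidelines: a selection rate for any group that is less than four-fifths (80%) of the rate for the group with the highest rate is generally regarded by US federal enforcement agencies as evidence of adverse impact. The sketch below applies that ratio to a tool's outcomes. The applicant and selection counts are invented, the function names are hypothetical, and passing this check does not by itself show that a tool is unbiased.

```python
from __future__ import annotations


def adverse_impact_ratios(selected: dict[str, int], applicants: dict[str, int]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = {group: selected[group] / applicants[group] for group in applicants}
    highest = max(rates.values())
    return {group: rate / highest for group, rate in rates.items()}


def flag_adverse_impact(ratios: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Groups whose ratio falls below the four-fifths (80%) threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Invented example: outcomes of a hypothetical resume-screening tool.
applicants = {"men": 200, "women": 200}
selected = {"men": 60, "women": 30}

ratios = adverse_impact_ratios(selected, applicants)
print({group: round(ratio, 2) for group, ratio in ratios.items()})  # {'men': 1.0, 'women': 0.5}
print(flag_adverse_impact(ratios))  # ['women']
```

Here women are selected at half the rate of men (15% versus 30%), well below the 80% threshold, so the tool would warrant closer scrutiny.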

Example: Amazon

One example of this in action is Amazon's hiring algorithm. Amazon received many resumes each day, and a team at its Edinburgh office wanted to cut down on the amount of human labor involved in reviewing them. Since AI was already used in many parts of the business, adding it to the hiring process was a natural step. The team set out to design an AI algorithm that reviewed resumes and rated them from one to five stars, and then used this rating to filter candidates (Dastin, Jeffrey). The team created five hundred models and taught them to recognize some fifty thousand terms that appeared on past candidates' resumes. The team began building the tool in 2014, and by 2015 Amazon noticed that it was not rating candidates fairly: it was biased against women, consistently ranking men higher than women with similar skills and qualifications (Dastin, Jeffrey).

The Amazon team tried to debias the data, but ultimately the effort failed. The algorithm picked up on language more common in men's resumes even though it was never told a candidate's gender. It also penalized terms such as “women's chess club” and the names of all-women's colleges. Because the algorithm was trained on resumes submitted over the previous 10 years, most of which came from men, it learned to treat these female-associated terms as negative. Amazon claims it never used the algorithm as a single source of truth, only as a tool to make the hiring process easier, and the team was disbanded by the start of 2017. Amazon was not breaking any laws, since it took “reasonable measures” to debias the data and claimed the tool was never a single source of truth. This is a gap in the law: “reasonable measures” is hard to define, and it is also very hard to detect whether companies are using these algorithms at all.
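The mechanism described above, in which a model penalizes a word like “women's” without ever being told anyone's gender, can be reproduced with a very simple scoring model. The sketch below learns a smoothed log-odds weight for each word from past hiring outcomes and scores new resumes by summing those weights. The historical data, the function names, and the scoring method are all hypothetical simplifications; the details of Amazon's models were not published, so this is not how Amazon's tool worked.

```python
import math
from collections import Counter


def learn_token_weights(history):
    """Learn a smoothed log-odds weight for each word from (resume_text, was_hired) pairs."""
    hired, rejected = Counter(), Counter()
    for text, was_hired in history:
        words = set(text.lower().split())  # count each word at most once per resume
        (hired if was_hired else rejected).update(words)
    vocabulary = set(hired) | set(rejected)
    # Add-one smoothing avoids log(0) for words seen with only one outcome.
    return {w: math.log((hired[w] + 1) / (rejected[w] + 1)) for w in vocabulary}


def score(text, weights):
    """Sum the learned weights of a resume's words; unseen words contribute 0."""
    return sum(weights.get(w, 0.0) for w in set(text.lower().split()))


# Invented history in which past hires were mostly men.
history = [
    ("software engineer chess club captain", True),
    ("software engineer executed launch", True),
    ("software engineer captured market share", True),
    ("software engineer women's chess club captain", False),
    ("software engineer women's coding society", False),
]

weights = learn_token_weights(history)
resume_a = "software engineer chess club captain"
resume_b = "software engineer women's chess club captain"

print(round(weights["women's"], 2))  # -1.1
print(round(score(resume_a, weights), 2), round(score(resume_b, weights), 2))  # 0.58 -0.52
```

The two test resumes differ only by the word “women's”, yet the second scores lower, because every resume containing that word in the invented history was rejected. Removing an explicit gender field would not help here, since the bias is carried by an ordinary-looking word.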

Researchers often want to test platforms like Amazon, LinkedIn, and others for bias, and much of this research aims to detect gender and racial bias. The problem is that, until recently, researchers feared the Computer Fraud and Abuse Act (CFAA). This American law was interpreted as making it illegal to violate the terms of service of a website you are using, which deterred researchers from creating “fake” accounts to detect bias in an algorithm (Derek B. Johnson).

This changed when the American Civil Liberties Union (ACLU) won a case that allows this kind of research. The ACLU argued that the CFAA was vague and would violate researchers' First and Fifth Amendment rights. In late March 2020, the court ruled in their favor (Derek B. Johnson).

Example: Ewert v Canada

In Canada, the Correctional Service of Canada uses five different algorithmic tests to produce a risk assessment for inmates. This assessment rates how likely someone is to reoffend if released from prison and is often used in parole hearings and other judicial decisions (Amy Matychuk). Issues arise when these algorithms are biased against a particular race, gender, or culture, which can lead to unfairness in who receives parole and when.

This was the case for Jeffrey G. Ewert, a federal inmate in Canada and an Indigenous man, a member of the Métis people. He claimed that these five tests were biased against Indigenous people. His legal actions spanned over 18 years and produced more than five written documents describing how Indigenous people are treated unfairly by these algorithms (Amy Matychuk). The issue finally reached the Supreme Court of Canada, which ruled on June 13, 2018, in a 7-2 decision, that the Correctional Service of Canada was breaking the law. Specifically, it was violating the Corrections and Conditional Release Act, which requires the Service to “take all reasonable steps to ensure that any information about an offender that it uses is as accurate, up to date and complete as possible” (Beatson, Jesse). This is an important case, as it can set a precedent for future cases of this nature and lead to a better and fairer correctional system in Canada.

Example: Uber

On a Sunday evening in March 2018, an Uber car was driving in self-driving mode, with a human observer, in Arizona. Arizona had allowed Uber to test its self-driving cars there to attract more business to the state. The Volvo XC90 was in autonomous mode when it struck and killed Elaine Herzberg, a pedestrian who was jaywalking. The car was traveling at around 40 miles per hour. Although self-driving cars had been involved in earlier incidents, Herzberg is believed to be the first pedestrian killed by a self-driving car (Wakabayashi, Daisuke). The ethical question is: whose fault is this? Uber settled with the victim's family for an undisclosed amount only a few weeks after the crash (McFarland, Matt). Even so, an investigation followed, and it was more than two years before criminal charges were filed. Rafaela Vasquez, an Uber contractor who was the person behind the wheel, was charged with negligent homicide. According to police, Vasquez was watching TV on her phone at the time of the crash and did not see Herzberg crossing the street. It was her duty to take over the wheel in an emergency, and she failed to do so (McFarland, Matt). Vasquez pleaded not guilty, and the case was still ongoing at the time of writing.

Uber was fortunate with this outcome; if Vasquez had not been charged, this could have been a challenging case for Uber. The car's emergency braking system had been disabled to ensure a smoother ride, and Uber was relying on the driver to take over in an emergency (McFarland, Matt). The car's self-driving system detected Herzberg but did not classify her as a pedestrian, and because emergency braking was disabled, the car did not brake. Had this feature been on, the outcome might have been different.

Solutions

Looking at all these issues, one might think there is nothing we can do. In fact, there are actions we can take to minimize and mitigate them. The first and foremost is being aware of these issues: once we recognize that there is an issue, we can take action to prevent it.

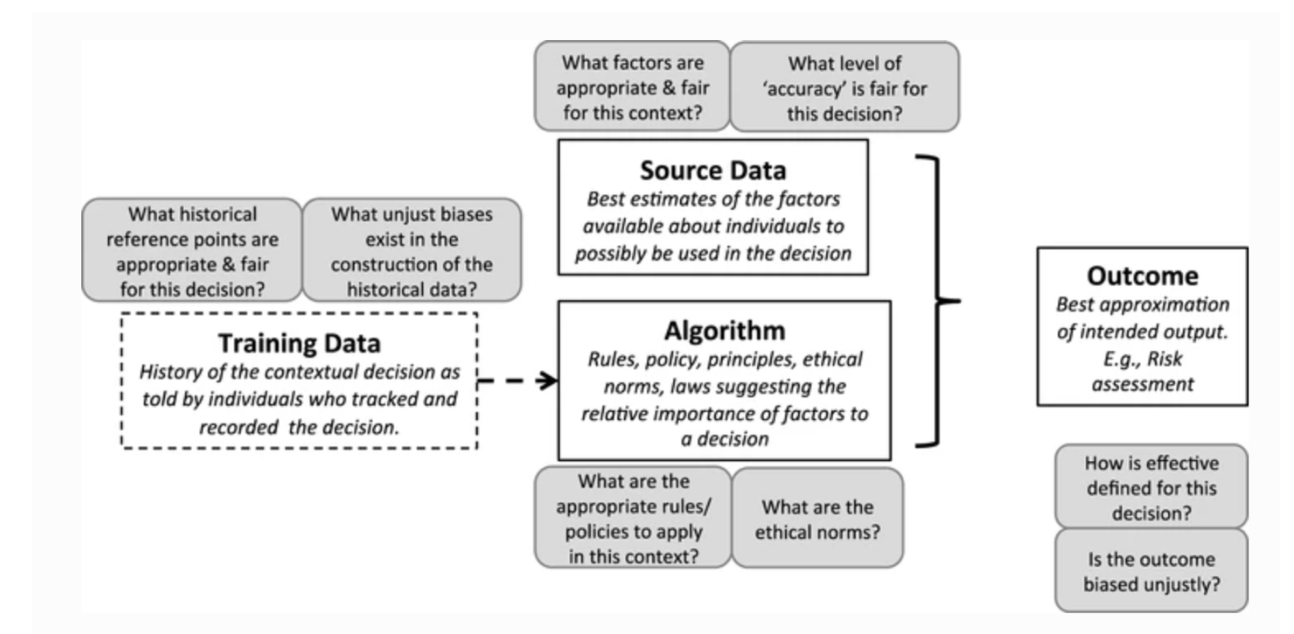

If we think of a company’s process as a system where information flows through. When looking at a system its up to the company to decide what parts of the system uses AI and what parts are done by humans. (Martin, K.) There are some cases where its suitable for AI to do a task and some cases where a human might be more suitable. Martin made an excellent decision-making graph in his article “Ethical Implications and Accountability of Algorithms”. This can help make such decisions. (See graph below)

(Martin, K.)

Conclusion

There are many ethical issues in AI algorithms, and many examples of them. Knowing what these issues are is the first step toward solving and avoiding them. The ethical issues examined here were bias, transparency, and accountability.

Bias occurs when an algorithm treats one group of people differently from another. This is an issue because it leads to unfairness in our society, with certain demographics gaining an advantage because of their age, race, or gender. We saw this play out in Amazon's hiring algorithm, which gave men an unfair advantage over women when rating their resumes. Another example was Ewert v Canada, in which judicial algorithms that rated how likely people were to reoffend treated Indigenous people unfairly. Although both cases ended well (Amazon stopped using the algorithm and Ewert won his case), the harm could have been avoided if the organizations had been aware of the bias in their algorithms.

The issue of transparency occurs when an AI algorithm gives answers but cannot explain why. This can happen when complex machine learning algorithms such as neural networks are used, where it may be hard (but not impossible) to find out why the algorithm chose a particular value. One example was the COMPAS algorithm, which was used to assess inmates but did not explain why it gave them the ratings it did. The EU is trying to address this issue by releasing guidelines for using AI algorithms, which it tested with organizations to see whether they were viable.

When an AI algorithm makes mistakes, who should be held accountable? This is a murky issue, as there is little legislation or regulation around it. In the Uber case, the person supervising the self-driving car was charged because she failed to intervene. In Ewert v Canada, the Correctional Service of Canada was held accountable for the flaws in the algorithms it used to assess inmates.

Overall, AI has many amazing uses, but we should not brush over the ethical issues surrounding AI algorithms. As we saw, ignoring these issues can lead to unfair treatment of people that is unacceptable in society. Being aware of these issues is the first step toward better, fairer AI algorithms.

References

- Bostrom, Nick and Eliezer Yudkowsky. “Ethics in Machine Learning and Other Domain-Specific AI Algorithms.” (2013).

- Siau, Keng & Wang, Weiyu. “Ethical and Moral Issues with AI.” (2018).

- Wakabayashi, Daisuke. “Self-Driving Uber Car Kills Pedestrian in Arizona, Where Robots Roam.” The New York Times, The New York Times, 19 Mar. 2018, www.nytimes.com/2018/03/19/technology/uber-driverless-fatality.html.

- McFarland, Matt. “Uber Self-Driving Car Operator Charged in Pedestrian Death.” CNN, Cable News Network, 18 Sept. 2020, www.cnn.com/2020/09/18/cars/uber-vasquez-charged/index.html.

- Dastin, Jeffrey. “Amazon Scraps Secret AI Recruiting Tool That Showed Bias against Women.” Reuters, Thomson Reuters, 10 Oct. 2018, www.reuters.com/article/us-amazon-com-jobs-automation-insight-idUSKCN1MK08G.

- Bogen, Miranda. “All the Ways Hiring Algorithms Can Introduce Bias.” Harvard Business Review, 15 Oct. 2019, hbr.org/2019/05/all-the-ways-hiring-algorithms-can-introduce-bias.

- Martin, K. Ethical Implications and Accountability of Algorithms. J Bus Ethics 160, 835–850 (2019). https://doi.org/10.1007/s10551-018-3921-3

- Ali, Muhammad, et al. "Discrimination through optimization: How Facebook's ad delivery can lead to skewed outcomes." arXiv preprint arXiv:1904.02095 (2019).

- Qing Rao and Jelena Frtunikj. 2018. Deep learning for self-driving cars: chances and challenges. In Proceedings of the 1st International Workshop on Software Engineering for AI in Autonomous Systems (SEFAIS '18). Association for Computing Machinery, New York, NY, USA, 35–38. DOI:https://doi.org/10.1145/3194085.3194087

- Burt, Andrew. “The AI Transparency Paradox.” Harvard Business Review, 13 Dec. 2019, hbr.org/2019/12/the-ai-transparency-paradox.

- Larsson, Stefan and Fredrik Heintz. “Transparency in Artificial Intelligence.” Internet Policy Review, vol. 9, no. 2, 2020. Accessed 8 Nov. 2020.

- “Ethics Guidelines for Trustworthy AI.” Shaping Europe's Digital Future - European Commission, 9 July 2020, ec.europa.eu/digital-single-market/en/news/ethics-guidelines-trustworthy-ai.

- Gutierrez, Daniel. “AI Black Box Horror Stories – When Transparency Was Needed More than Ever.” Open Data Science - Your News Source for AI, Machine Learning & More, 26 Dec. 2019, opendatascience.com/ai-black-box-horror-stories-when-transparency-was-needed-more-than-ever/.

- Stotler, Laura. “AI in Healthcare: Who's to Blame When Things Go Wrong.” Future of Work News, 13 Mar. 2020, www.futureofworknews.com/topics/futureofwork/articles/444789-ai-healthcare-whos-blame-when-things-go-wrong.htm.

- Thompson, Kirsten. “Use of AI Algorithm Triggers Lawsuit and Countersuit.” Dentons Data, 9 June 2020, www.dentonsdata.com/use-of-ai-algorithm-triggers-lawsuit-and-countersuit/.

- Beardsworth, Thomas. “Who to Sue When a Robot Loses Your Fortune.” BloombergQuint, 7 May 2019, www.bloombergquint.com/technology/who-to-sue-when-a-robot-loses-your-fortune.

- Derek B. Johnson. “Court: Algorithmic Bias Research Doesn't Count as Hacking.” FCW, 30 Mar. 2020, fcw.com/articles/2020/03/30/algo-research-cfaa-ruling.aspx.

- Amy Matychuk. “Eighteen Years of Inmate Litigation Culminates with Some Success in the SCC’s Ewert v Canada.” ABlawg, 26 June 2018, http://ablawg.ca/wp-content/uploads/2018/06/Blog\_AM\_EwertSCC.pdf.

- Beatson, Jesse. “Ewert v Canada: Improving Prison Processes for Indigenous Peoples?” TheCourt.ca, 30 Oct. 2018.
